How to Make Cursor an Agent That Never Forgets and Has Better Project Context

Stop explaining the same context over and over. Start building with AI that remembers everything.

Token Limits Kill Flow

Every complex project, same nightmare: token limits.

Cursor is brilliant but forgetful. After hours of explaining architecture and walking through code, you eventually hit the limit and get stuck with three bad options:

- Start a new chat → lose all context

- Micromanage prompts → constant friction

- Summarize to compress → lose critical details

The ".md file hack" doesn’t work either. Project context is always evolving, so it can’t live in a static file. You’re stuck updating it manually, it has no change history, and worst of all, you still have to add more context in chat.

Bottom line: You spend more time managing context than actually building.

🧩 What You Need: A Living Memory Layer

Imagine a memory system that evolves with your project. One that automatically captures and organizes context from:

- 🧑‍💻 Your conversations with AI tools like Claude, ChatGPT, and Cursor

- 🧾 Technical docs and architecture notes - PRDs, requirements

- 🧑‍🤝‍🧑 Team meetings and Slack threads - key decisions, notes

- ✅ Task details from tools like Linear, Jira, or Notion

…and makes it instantly accessible whenever and wherever you need it. No more re-explaining. No more friction. Just flow.

This isn’t about giving Cursor a longer memory. It’s about giving it a living one: a shared, persistent context layer that travels with you across tools, chats, and time.

🔗 Enter: CORE Memory via MCP

Unlike static files, CORE builds a temporal knowledge graph of your work, linking every decision, chat, or document to its origin, timestamp, reason, and related context.

Even better? It’s portable. Your memory works across Cursor, Claude, and any MCP-compatible tool. One memory graph, everywhere.
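To make the idea of a temporal knowledge graph concrete, here is a minimal sketch in Python. It is not CORE's implementation or data model: `MemoryNode`, `TemporalGraph`, and the sample decisions are hypothetical names invented for illustration. It only shows how a remembered fact can carry its origin, timestamp, reason, and links, and how a timestamp lets you ask what was known at a given moment.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class MemoryNode:
    """One remembered fact with the provenance fields the text lists (hypothetical model)."""
    node_id: str
    statement: str
    origin: str          # where it came from, e.g. "slack" (illustrative label)
    timestamp: datetime  # when it was recorded
    reason: str          # why the decision was made
    related: list[str] = field(default_factory=list)  # ids of linked nodes


class TemporalGraph:
    """Toy in-memory graph; a real system would persist and index this."""

    def __init__(self) -> None:
        self.nodes: dict[str, MemoryNode] = {}

    def add(self, node: MemoryNode) -> None:
        self.nodes[node.node_id] = node

    def as_of(self, moment: datetime) -> list[MemoryNode]:
        """Return everything recorded at or before `moment`, oldest first."""
        known = [n for n in self.nodes.values() if n.timestamp <= moment]
        return sorted(known, key=lambda n: n.timestamp)

    def neighbors(self, node_id: str) -> list[MemoryNode]:
        """Follow the links of one node, skipping ids that are not in the graph."""
        return [self.nodes[r] for r in self.nodes[node_id].related if r in self.nodes]


graph = TemporalGraph()
graph.add(MemoryNode("d1", "Use PostgreSQL for storage", "slack",
                     datetime(2025, 1, 10), "Team already operates it"))
graph.add(MemoryNode("d2", "Add a Redis cache in front of PostgreSQL", "cursor-chat",
                     datetime(2025, 3, 2), "Read latency was too high", related=["d1"]))

early = graph.as_of(datetime(2025, 2, 1))   # only the January decision existed then
linked = graph.neighbors("d2")              # the decision that d2 builds on
print([n.statement for n in early])
print([n.origin for n in linked])
```

A static file can hold the two statements, but not the ordering or the links between them, which is the gap the graph is meant to close.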

CORE memory graph

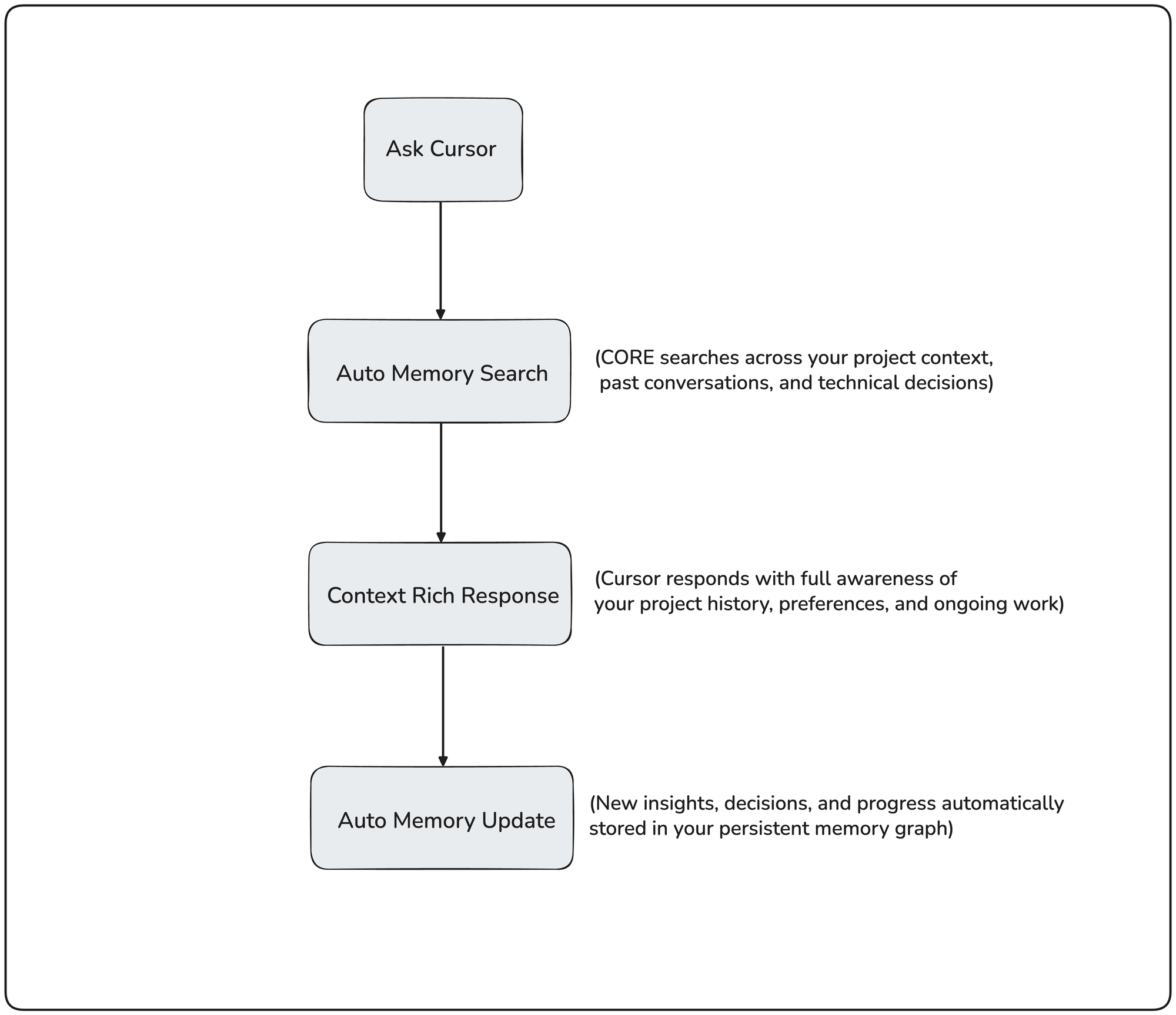

⚙️ How It Works: Cursor + CORE Integration

Start by adding the CORE MCP server to your Cursor setup. Follow this detailed guide.
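As a rough illustration of what that setup produces, the sketch below builds an entry in the shape Cursor reads from its MCP configuration file (`.cursor/mcp.json`, under the `mcpServers` key). The server label `core-memory` and the URL are placeholders, not CORE's real endpoint, and any authentication step is left out; take the actual values from the guide.

```python
import json

# Placeholder only: replace with the server URL given in the CORE setup guide.
CORE_MCP_URL = "https://example.invalid/core-mcp"  # hypothetical, not a real endpoint

config = {
    "mcpServers": {
        # The key is an arbitrary label shown in Cursor's MCP settings.
        "core-memory": {
            "url": CORE_MCP_URL,
        }
    }
}

# Render the JSON you would place in .cursor/mcp.json.
mcp_json = json.dumps(config, indent=2)
print(mcp_json)
```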

Once integrated, create a custom rule that transforms Cursor from a stateless assistant into a memory-first agent. You can find the full Cursor instruction here.

This simple addition gives Cursor persistent memory, so it remembers your architecture, decisions, and code patterns across sessions.

Here’s what happens under the hood:

Every time you chat with Cursor:

- It searches for direct context, related concepts, your past patterns, and progress status

- It stores what you wanted, why you wanted it, how it was solved, and what to do next

- All insights are added as graph nodes with rich metadata: timestamp, origin, related concepts, and reasoning
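The search-then-store cycle in the list above can be sketched as a small loop. This is an assumption-laden toy, not CORE's API or Cursor's internals: `Episode`, `InMemoryStore`, `handle_turn`, and `fake_agent` are hypothetical, and the keyword match stands in for whatever retrieval a real memory layer uses.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Episode:
    """What gets stored after a turn: the four items from the list, plus metadata."""
    wanted: str
    why: str
    how: str
    next_step: str
    origin: str
    timestamp: datetime


class InMemoryStore:
    """Stand-in for a memory backend (hypothetical); search is naive keyword matching."""

    def __init__(self) -> None:
        self.episodes: list[Episode] = []

    def search(self, query: str) -> list[Episode]:
        # Ignore short words so that "to" or "the" do not match everything.
        words = {w.lower() for w in query.split() if len(w) > 3}
        hits = []
        for ep in self.episodes:
            text = f"{ep.wanted} {ep.why} {ep.how} {ep.next_step}".lower()
            if any(w in text for w in words):
                hits.append(ep)
        return hits

    def store(self, episode: Episode) -> None:
        self.episodes.append(episode)


def handle_turn(store: InMemoryStore, request: str, answer_fn) -> list[Episode]:
    """Search memory first, answer with that context, then store the outcome."""
    context = store.search(request)
    result = answer_fn(request, context)
    store.store(Episode(
        wanted=request,
        why=result["why"],
        how=result["how"],
        next_step=result["next"],
        origin="cursor-chat",  # illustrative origin label
        timestamp=datetime.now(timezone.utc),
    ))
    return context


def fake_agent(request: str, context: list[Episode]) -> dict:
    """Placeholder for the model call; returns fixed text."""
    return {"why": "Requested during the session", "how": "Sketch only", "next": "Write tests"}


store = InMemoryStore()
first = handle_turn(store, "Add retry logic to the payment client", fake_agent)
second = handle_turn(store, "Tune the payment timeout", fake_agent)
print(len(first), len(second))
```

The first turn finds nothing because the store is empty; the second turn recalls the earlier payment work before answering, which is the behaviour the custom rule asks Cursor to follow.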

Searching memory in Cursor

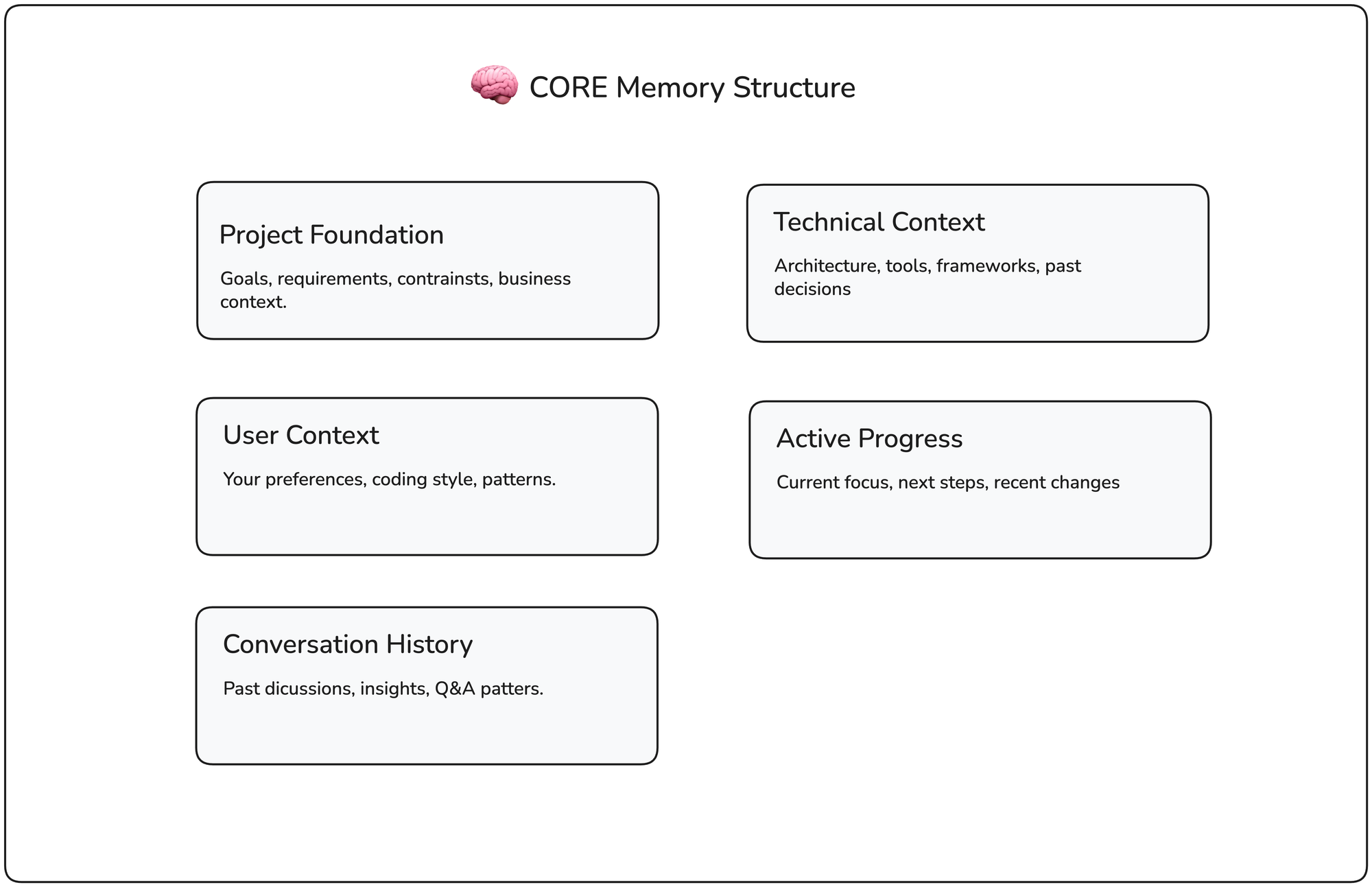

CORE Memory Categories

The instruction ensures that your context is sorted into a fixed set of categories, and that it is always recalled and updated under those same categories in CORE memory.

🧾 CORE Also Captures Context from Slack, Linear, and More

CORE lives up to its name: it's a living memory that grows with your work.

You can connect tools like Slack and Linear, automatically pulling in messages, issues, and other key updates. This gives Cursor deeper, real-time context across your entire workflow.

Adding memory from Slack messages to CORE

🔧 Focus on Code. Let CORE Handle the Context

CORE lets you focus on coding while it supplies the right context to your agent. Try it out at core.heysol.ai.

✨ CORE is also open source. Check out our repo and give us a ⭐ on GitHub: https://github.com/RedPlanetHQ/core